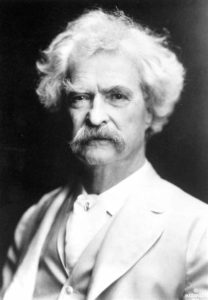

As both a trial attorney and the author of novels, I have learned to heed the words of Mark Twain, who wrote: “Truth is stranger than fiction, but it is because fiction is obliged to stick to possibilities. Truth isn’t.”

We’ve all heard remarkable true stories about amazing coincidences (twins separated at birth and reunited in their forties on a train from Paris to Nice when the puzzled conductor tells one of them that he’s already taken her ticket two cars back) and feats of superhuman strength (the 110-pound mother who somehow stops a rolling, driverless one-ton pickup truck before it runs over her baby). Ah, but try to use one of those scenes for the climax of your novel and your editor will reject it. “But it really happened,” you protest. “Who cares?” your editor replies. “This is fiction. Your readers won’t buy it.”

But the boundary between truth and fiction gets blurry—and frightening—in the realm of Artificial Intelligence. Which brings us to the disturbing true tale of the stunning courtroom blunder by an earnest but naïve trial attorney who relied on ChatGPT to help draft his response to the defendant’s motion to dismiss.

In this blog post for my law firm, I explain the deeper, scarier lesson that attorney learned the hard way: Artificial Intelligence, when relied upon as your co-counsel, might better be described as Artificial Stupidity, or even Genuine Mendacity.